Privacy & Productivity: Your November 2025 NYC AI Insider

AI is great... but we have to watch our data.

📚 ANNOUNCEMENT: Pioneering Minds AI Community Platform (BETA)

Hello, AI pioneers!

Do you want to discuss AI but can’t find your group? Do you want to build more but lack ideas? Do you want to share products and projects you’ve built with your peers? Do you have challenges you want to solve but don’t know how? Are you looking for jobs? Mentors? Investors?

What if I told you that our Community Platform can help you find the peers, mentors, and resources you are looking for, and help you grow in the process?

Thanks to our exceptional team, we built the platform to further our organization’s mission:

Grow AI-Native Pioneers—professionals, founders, and scholars—who build, deploy, and govern AI that compounds human opportunity responsibly.

And it’s now open for beta access! Join the waitlist today! We want to keep this as lighthearted as possible, so as more pioneers sign up, we will unlock increasingly valuable raffle rewards and resources for all members!

Cheers,

Nick Gu

President, Pioneering Minds AI

🌟 Featured Events

TRAE SOLO Hackathon: Build Reliable AI | Nov 22, 9 AM-6:30 PM, Full-day hackathon for participants of all backgrounds, with a hands-on workshop RSVP

Agents in Production - MLOps x Prosus | Nov 18, 2-8:30 PM, Virtual event with 30+ talks on deploying AI agents in production RSVP

The AI Advantage: Building and Funding Startups | Nov 14, 10 AM-1:30 PM, CBS Startups Week conference with founders and investors RSVP

📅 November Events: Your Complete NYC AI Calendar

November brings an unprecedented concentration of AI events to NYC. We’ve organized them by focus area to help you strategically plan your month.

🔧 Pioneering Minds Events

TRAE SOLO Hackathon: Build Reliable AI | Nov 22, 9 AM-6:30 PM, Full-day hackathon for participants of all backgrounds, with a hands-on workshop RSVP

🤖 Application

November Demo Day ft. Google Cloud | Nov 17, 6-9 PM, Showcase your latest AI demos and dive deep into LLMs with NYC’s best technical community RSVP

Unleash Your Productivity with Gemini AI | Nov 24, 3-4 PM, Comprehensive guide to leveraging Google’s Gemini AI for streamlining workflows RSVP

Responsible AI for Human Well-Being | Nov 11, 4-5:30 PM, Focus on wearables and digital healthcare AI applications at Stevens Institute RSVP

Agents in Production - MLOps x Prosus | Nov 18, 2-8:30 PM, Virtual event with 30+ talks on deploying AI agents in production RSVP

The AI Advantage: Building and Funding Startups | Nov 14, 10 AM-1:30 PM, CBS Startups Week conference with founders and investors RSVP

🛠️ Deep Dives

2025 UCL NeuroAI Annual Conference | Nov 5, 9:30 AM-5 PM, UCL conference bringing together neuroscience and AI researchers with keynote speakers RSVP

Bias in AI Reading Group | Nov 5, 11 AM-12 PM, Discussion on fairness issues in AI systems (Online) RSVP

AI/ML Conversations Meetup: Smart CPU Offloading | Nov 12, 5:30-7:30 PM, Monthly meetup on scalable LLM inference at Capital One Office RSVP

Panel on Building AI Responsibly | Nov 17, 6:30-9 PM, Discussion with Code with AI founders on safety-first AI development RSVP

🎭 Networking & Social

NYC AI Users - AI Enthusiasts Happy Hour | Nov 6, 7-10 PM, Social mixer with favorite former speakers at Smithfield Hall RSVP

Post-Reality: A Prototype from the Edge | Nov 10, 7-9 PM, Interactive storytelling experiment at Film at Lincoln Center RSVP

The VC Playbook: Nailing Your Pitch, GTM, and AI Ops | Nov 11, 6-9 PM, Fireside chat on building scalable AI businesses with ff Venture Capital RSVP

💡Use AI and Keep Your Data Safe

In the last issue, we discussed the mindset for working with AI. Since then, there have been quite a few launches from the big players: Google’s upgraded AI Studio, OpenAI’s Atlas, and Claude Code Skills, for example. However, with all of these products, we are rushing into an era of increased risk of data exploitation. So how can we get productivity gains while keeping our information safe?

Busting Myths: LLMs and Your Data

Many misconceptions are floating around about how large language model (LLM) tools handle your data. Let’s debunk a few key myths:

Myth 1: “Consumer models always learn from everything I type.”

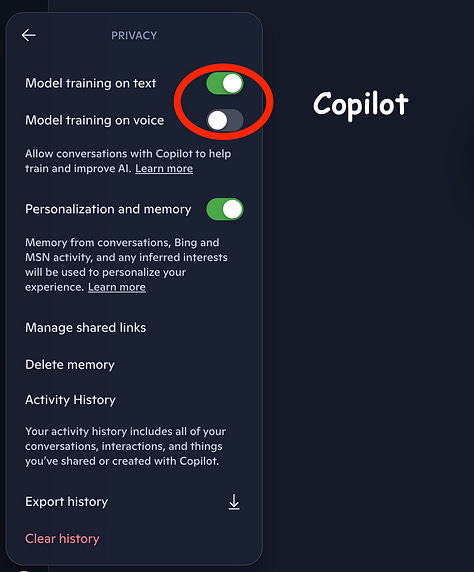

Reality: Not anymore. All major consumer AI providers—OpenAI, Anthropic, and Google—now offer explicit controls to disable model training on your conversations. For ChatGPT, you can turn off “Improve the model for everyone” in your Data Controls. For Claude, you can disable training in your Privacy Settings. For Gemini, turning off “Keep Activity” prevents your chats from being used for training. However, be aware that defaults vary. As of October 2025, Anthropic’s Claude is opt-in for training, while others may be opt-out.

Myth 2: “Privacy features make my data completely safe.”

Reality: “No training” does not mean zero retention. Providers still retain data for a period to monitor for abuse and fraud or to meet legal requirements. For example, OpenAI’s “Temporary Chats” are deleted within 30 days. Google Gemini saves chats for up to 72 hours even with activity history off. Furthermore, using plugins, browsing, or other connected tools can introduce third-party data paths with their own policies.

Myth 3: “Enterprise and API accounts have the same privacy risks as free accounts.”

Reality: This is false. Enterprise and API services from OpenAI, Anthropic, and Google Cloud operate under a fundamentally different, more protective privacy model. By default, they do not train on your business data. These services are governed by commercial terms and often come with a Data Processing Addendum (DPA), providing stronger legal and technical protections. If you are handling sensitive business data, upgrading to an enterprise or API plan is a critical step.

Your Task Today: Disable Data Sharing for Your Apps

Take a look at all the AI products you use, and explicitly disable data sharing in each app’s settings.

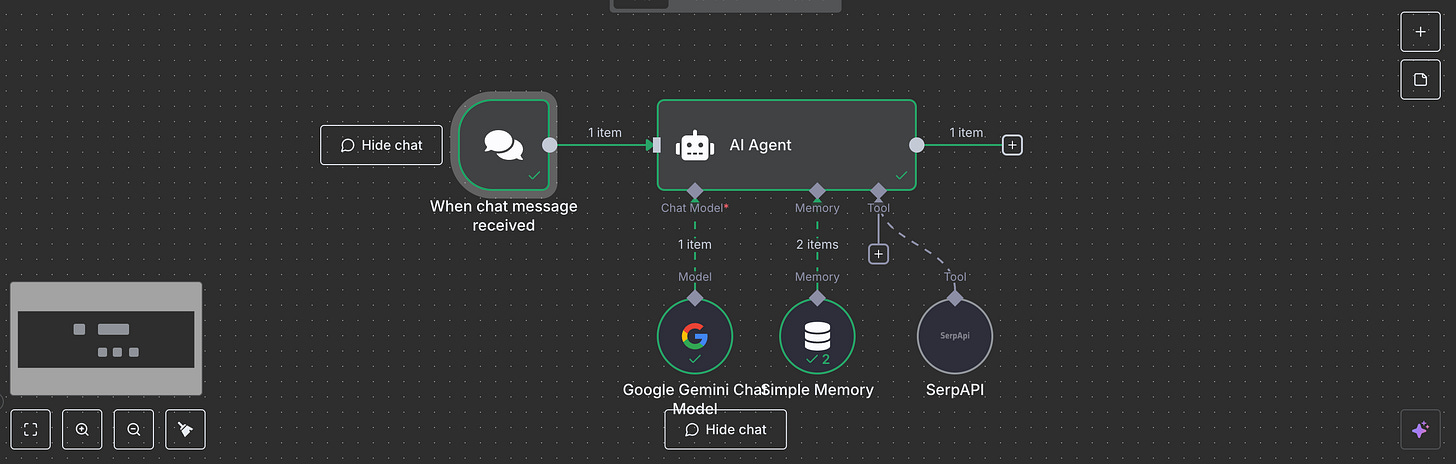

You can build an AI agent with n8n to help you figure out how to disable data collection in the AI products you use. Below is a small example.

This agent was built on n8n, using the Gemini API and Serp API for Google search. This is the simplest form of an AI agent: chat, model, memory, tool.

We have disabled execution saving, meaning that your executions will NOT be saved by us. So feel free to chat and explore how to safeguard your data here! The prompt was generated with GPT-5 Thinking Extended + web search. You can easily copy this and create your own n8n workflow :D
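For readers who prefer code to a visual workflow, here is a minimal Python sketch of the same four parts (chat, model, memory, tool). It is not the n8n workflow itself: `Agent`, `fake_model`, and `fake_search` are hypothetical names, and both the model and the search tool are stubs so the example runs offline. A real agent would call an LLM API and a search API in their place, and would usually let the model decide when to use the tool rather than calling it on every turn.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    """Chat + model + memory + tool, wired together in the simplest way."""
    model: Callable[[list], str]   # takes the message history, returns a reply
    tool: Callable[[str], str]     # e.g. a web search; stubbed below
    memory: list = field(default_factory=list)

    def chat(self, user_message: str) -> str:
        # Memory: every turn is appended so later turns can see earlier ones.
        self.memory.append({"role": "user", "content": user_message})
        # Tool: this sketch always searches; real agents decide per turn.
        evidence = self.tool(user_message)
        self.memory.append({"role": "tool", "content": evidence})
        # Model: produce a reply from the full history.
        reply = self.model(self.memory)
        self.memory.append({"role": "assistant", "content": reply})
        return reply


def fake_search(query: str) -> str:
    """Stand-in for a search API call (assumption: no network access)."""
    return f"[search results for: {query}]"


def fake_model(messages: list) -> str:
    """Stand-in for an LLM call; it just echoes the latest tool output."""
    latest_tool_output = messages[-1]["content"]
    return f"Based on {latest_tool_output}, check the app's privacy settings."


if __name__ == "__main__":
    agent = Agent(model=fake_model, tool=fake_search)
    print(agent.chat("How do I disable training in ChatGPT?"))
```

Swapping `fake_model` and `fake_search` for real API calls keeps the structure unchanged, which is the point of the chat, model, memory, tool pattern.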

[Advanced] Safe (and Reliable) Research with AI Agents: Two Smart Approaches

Finally, let’s look at how you can harness AI as a research assistant safely. We’ll explore two scenarios: (A) using a conversational AI like ChatGPT itself to help gather information, and (B) creating your own custom “agent” with API calls. In both cases, the goal is to get the answers you need without leaking your data.

A. Researching with Public AI Products

Using public AI services (e.g. ChatGPT, Claude, Google’s Gemini) for research is safer than it might sound. Major providers state they will not train on your inputs AFTER you opt out. They may still retain your chats for a short period for compliance and safety (OpenAI API logs, for example, auto-delete after 30 days), but your content won’t be used to train their models without consent. In short, the large AI platforms commit to not training on your data once you opt out, and many offer enterprise plans with even stricter privacy guarantees.

So how do you actually do deep research with ChatGPT or Gemini? Here’s a brief guide:

Define Your Question and Plan: Start with a clear research question or hypothesis. Break it down if it’s complex - and don’t hesitate to use AI for this step.

Use Advanced Models & Features: Use models with reasoning capability and search grounding for any topic that matters. Advanced tiers have bigger “brains” for reasoning and are less likely to hallucinate.

Iterative Querying – Refine as You Go: Deep research products usually require iterative planning and confirmation. Add your clarifications and comments during this step to shape the model’s behavior.

Verify and Cross-Check: Always validate critical facts. AI can occasionally be wrong or out of date. If your AI provides sources or citations, check those links to confirm the info. The goal is to use AI’s speed to gather info, but use your judgment to verify it.

Above is an example of iterating on the prompt to produce a comprehensive deep research report. The generated result (exported to a Google Doc) includes a decent number of references for the requested topic.
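To make the “Verify and Cross-Check” step a bit more concrete, here is a small Python sketch that pulls the cited links out of an AI-generated answer and turns them into a checklist to open by hand. The function names and the URL pattern are our own illustrative choices, and the pattern is deliberately simple, so treat it as a starting point and not a complete URL parser.

```python
import re

# Simple pattern: stop at whitespace, closing brackets, and quotes.
URL_PATTERN = re.compile(r"https?://[^\s)\]>\"']+")


def extract_sources(answer: str) -> list:
    """Return the unique URLs in an answer, in order of first appearance."""
    sources = []
    for match in URL_PATTERN.findall(answer):
        url = match.rstrip(".,;:")  # drop trailing sentence punctuation
        if url not in sources:
            sources.append(url)
    return sources


def verification_checklist(answer: str) -> list:
    """One checklist line per cited source, to be confirmed manually."""
    return [f"[ ] open and confirm: {url}" for url in extract_sources(answer)]


if __name__ == "__main__":
    sample = (
        "See https://example.com/a. Also (https://example.org/b), "
        "and https://example.com/a again."
    )
    for line in verification_checklist(sample):
        print(line)
```

The script only lists what needs checking. Whether a source actually supports the claim is still your call.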

B. Building a Custom Deep Research Agent

For even more control, consider building your own research agent using AI APIs. This approach lets you customize how the AI works (and even run it entirely locally), which can be both powerful and privacy-preserving.

Due to the length of the content, we can’t cover all the details in this issue. We will post an example on the community platform soon.

Single vs Multi-Agent: First, decide if you need one AI agent or many. A single-agent system means one AI model handles the entire task sequentially: it reads the question, searches for info, composes an answer, etc. This is simpler to build and works well for many cases. However, Anthropic’s research found that a multi-agent setup can supercharge complex research tasks. On their internal research evaluation, a team-of-AIs approach (one “Lead” researcher AI directing several sub-agents in parallel) outperformed a single Claude model by about 90%. This divide-and-conquer technique has been used in products like Manus as well and can be re-created on your local machine.
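The lead-plus-sub-agents pattern can be sketched in a few lines of Python. This is an illustration of the general divide-and-conquer idea, not Anthropic’s or Manus’s implementation: `plan_subtasks`, `run_subagent`, and `synthesize` are hypothetical stubs, and in a real system each would wrap an LLM call (plus search tools for the sub-agents).

```python
from concurrent.futures import ThreadPoolExecutor


def plan_subtasks(question: str) -> list:
    """Lead agent step (stub): a real lead would ask an LLM to write the plan."""
    angles = ["background", "recent developments", "open risks"]
    return [f"{question} ({angle})" for angle in angles]


def run_subagent(subtask: str) -> str:
    """Sub-agent step (stub): stands in for an LLM + search call."""
    return f"findings for: {subtask}"


def synthesize(question: str, findings: list) -> str:
    """Lead agent step (stub): merge the sub-agents' findings into one report."""
    bullets = "\n".join(f"- {finding}" for finding in findings)
    return f"Report on {question}\n{bullets}"


def research(question: str, max_workers: int = 3) -> str:
    subtasks = plan_subtasks(question)
    # Sub-agents run in parallel threads; pool.map keeps results in plan order.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        findings = list(pool.map(run_subagent, subtasks))
    return synthesize(question, findings)


if __name__ == "__main__":
    print(research("AI data privacy"))
```

Threads fit here because real sub-agents spend most of their time waiting on network calls. A single-agent version would simply run the same subtasks in a plain loop.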

Using an existing framework: Instead of creating deep research agents on your own, you can leverage existing examples, such as Deep Research Agent provided by Google, Open Deep Research by LangChain, DeepResearchAgent, or DeerFlow. Each of these can be set up locally (tip: use Claude Code to help you with this)!

Switching to a Local Model: What if you have ultra-sensitive data and don’t want to call any cloud API at all? The good news is you can run these agents with a local large language model. Projects like Ollama make it easy to host LLMs on your own machine and expose them via an API. You can simply point the agent you set up earlier at your local API. This blog has a detailed walkthrough.

In summary, AI can be a powerful partner without compromising your privacy: either by trusting reputable providers who protect your data (after you opt out), or by taking the reins and hosting the intelligence yourself.
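As a rough sketch of what “connecting to your local API” looks like, the snippet below sends one chat message to an Ollama server using only Python’s standard library. It assumes Ollama is running on its default local address and that you have already pulled a model. The model name `llama3.2` is only an example, and `build_chat_request` and `ask_local_model` are names we made up for illustration.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"


def build_chat_request(prompt: str, model: str = "llama3.2") -> urllib.request.Request:
    """Build the HTTP request for a single-turn, non-streaming chat call."""
    payload = {
        "model": model,  # example name; use whichever model you have pulled
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for one JSON response, not a token stream
    }
    return urllib.request.Request(
        OLLAMA_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(prompt: str, model: str = "llama3.2") -> str:
    """Send the prompt to the local server and return the reply text."""
    with urllib.request.urlopen(build_chat_request(prompt, model)) as response:
        body = json.load(response)
    return body["message"]["content"]


if __name__ == "__main__":
    # Requires a running Ollama server; the prompt never leaves your machine.
    print(ask_local_model("Summarize why local models help with data privacy."))
```

Because the request goes to `localhost`, nothing is sent to a cloud provider. An agent framework that lets you configure its model endpoint can be pointed at the same address.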

Building the future, one product at a time.

The Pioneering Minds Team